Workflow App Optimisation

Qualitative and benchmarking analyses for application performance

My Role: User Research

December 2017 – August 2018

Project Overview

In this project I conducted user research to uncover the performance issues affecting users of a banking system.

- Methods: User interviews, benchmarking

- Skills: Interviewing, data analysis, reporting

- Deliverables: Research plan, user survey, summary report

- Tools: WebEx, Stopwatch, Excel, PowerPoint

Opportunity

I was responsible for a workflow system used by over 3,000 staff in 40+ countries around the world. It processes time-sensitive documents for global trade financing.

Over several months, our users raised concerns about the system’s slow performance. Previous investigations had failed to find meaningful solutions, so I set about identifying the areas where the application needed to be improved.

Strategy

We needed to understand how the problem presented itself to users by using test data to find out:

- Which activities were most affected

- How system speeds varied around the world

Once we had the root causes and remedies, we would repeat the same set of tests and make comparisons.
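As an illustration only (the project itself relied on a stopwatch and Excel), the before-and-after comparison could be sketched in Python along these lines. The function names, location and activity labels, and all timings are hypothetical placeholders, not project data.

```python
from statistics import mean


def percent_change(before: float, after: float) -> float:
    """Percentage change from `before` to `after`; negative means faster."""
    if before <= 0:
        raise ValueError("baseline timing must be positive")
    return (after - before) / before * 100


def compare_benchmarks(baseline: dict, retest: dict) -> dict:
    """Compare mean timings for every (location, activity) present in both runs."""
    shared_keys = baseline.keys() & retest.keys()
    return {
        key: percent_change(mean(baseline[key]), mean(retest[key]))
        for key in shared_keys
    }


# Hypothetical stopwatch timings in seconds (made up for illustration).
baseline = {
    ("Location A", "login"): [20.0, 24.0, 22.0],
    ("Location B", "save transaction"): [8.0, 12.0],
}
retest = {
    ("Location A", "login"): [11.0, 11.0, 11.0],
    ("Location B", "save transaction"): [7.0, 8.0],
}

changes = compare_benchmarks(baseline, retest)
for (location, activity), change in sorted(changes.items()):
    print(f"{location} / {activity}: {change:+.1f}%")
```

Keying each timing by location and activity keeps the two questions above (which activities, which parts of the world) visible in the comparison, and repeating an identical test set is what makes the percentages comparable.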

Process

1. User Interviews

I selected and interviewed transaction processing users from the busiest centres in the UK, India and the UAE to discover their frustrations with application performance. They also demonstrated their problems over WebEx, which gave us areas to target. They told us:

- It often took a long time to log in to the application, especially in the mornings

- Waiting for the system to load and save transactions was frustrating

- The system felt slower at certain times of their day

- They felt anxious when they fell behind on their processing backlogs as a result of system issues

2. Benchmarking

3. User Survey

4. Reporting Results

Deliverables

- Research plan

- Benchmark data

- User survey

- Summary presentation

Reflections

What would I keep doing?

- Using research methods to build evidence that supports my arguments

- Listening to users and gaining their trust

What should I stop doing?

- Settling for the status quo by accepting the “it is what it is” mentality before the project started

What could I start doing?

- Seeking opportunities that enable me to play to my strengths

What did I like about this project?

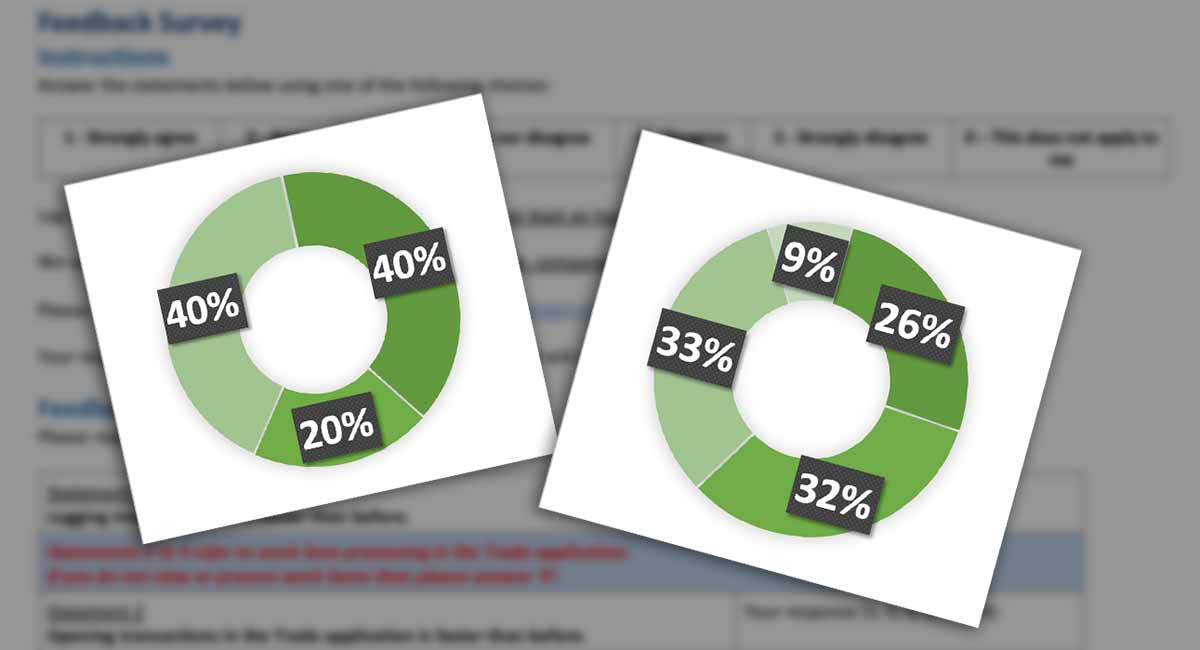

- Seeing how the simple act of using a stopwatch to record benchmarks led to an average 40% drop in global response times

- Receiving praise from users and stakeholders for the hands-on nature of our engagement

- Discovering how to play to my strengths in empathy and personal communication

What were the challenges in this project?

- The application team enjoyed near-instantaneous response times because they were geographically close to the system infrastructure

- Initial investigations by the local support teams were ineffective because their fast connections prevented them from experiencing the problems

DaveCheung
